-

CNN : NN인데 image에 특화했다.

-

back propagation

-

convnet -> conveolutional layer, relu, pooling layer, fully connected layer

-

image가 가진 특징만 뽑아?

-

trend towards smaller filters and deeper architectures

-

trend towards getting rid of POOL/FC layers

-

conv layer and pool layer

-

set of pixel을 가지고 weight를 준다

-

mpeg, jpeg 압축알고리즘을 보면 화면을 블럭단위로 계산하니까

-

마찬가지로 정한 pixel의 값이 크게 바뀌지 않으니까. 그걸로 optimize하자

-

regular 3-layer Neural network vs ConvNet

-

Convolution Layer

-

image 32x32x3 image, -> 5x5x3 filter

-

convolve the filter with the image

-

3d: width height depth

-

32x32x3 image, 5x5x3 filter w

-

W^Tx _ b

-

activation map: convolve(slide) over all spatial locations

-

number of filter is also hyperparameter

-

stack up activation maps

-

32x32x3 image -> 5x5x3 filter -> 28x28 activation map

-

6 filters produce 6 independet activation map

-

example 7x7 image -> 3x3 filter

-

5x5 output

-

hyper parameter: size of filter, stride,

-

7x7 input, 3x3 filter, 2 stride,

-

if stride 3, won’t fit -> cannot apply 3x3 filter on 7x7 input

-

0 padding ->

-

which filter get?

-

fitler도 learning의 대상, Filter가 Weight값이다.

-

Random initialze하고 back propagation해서 learn한다

-

data preprocessing도 learning한다.

-

example of learning (N-F)/stride + 1

-

w1h1d1 -> F -> w2h2d2

-

fx, sx, px - filter, stride, zeropadding

-

Pooling Layer

- make the presenatations smaller and more manageable

- operates over each activation map indendently

-

Max pooling

- 4x4 -> 2x2 filter:2 stride:2

- max pooling: get the biggest number

- trend not to use it

- polling -> only to reduce the computation?

-

small stride first, large stride later

-

Pooling Layer

-

앙상블,

Posted on:December 13, 2016 at 12:00 AM

Deep Learning Study Ch#04

Popular

Latest

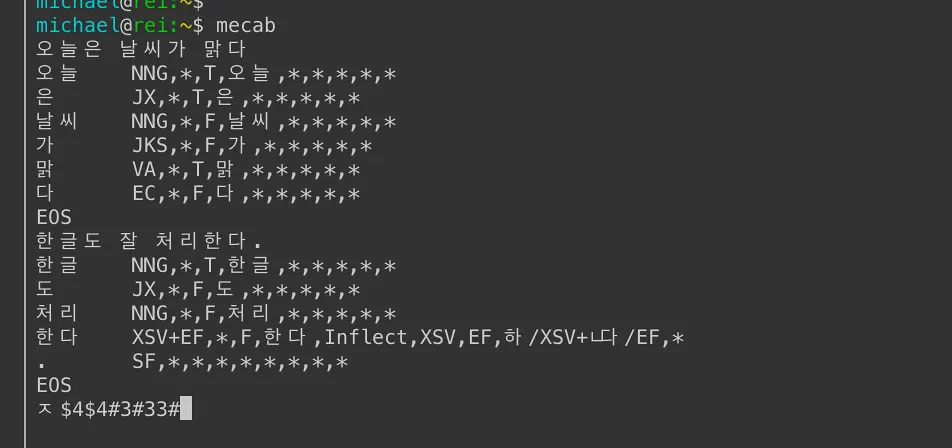

Mecab ko와 은전한닢 프로젝트 사전 설치하기

Sep 10, 2023

Accessing Google Cloud SQL from Local Machine with Cloud SQL Auth Proxy

Jun 26, 2023

How to remove "the gcp auth plugin is deprecated in v1.22+"

May 12, 2023

How to run Alpaca.cpp

Mar 27, 2023

Direnv with .env file

Jan 4, 2023

Securing Mongo DB

Jan 2, 2023

Postgres Useful Tips

Aug 27, 2022

Tag Cloud

c

svn

python

3d

ruby-on-rails

machine-learning

kubernetes

webgl

opengl

mfc

mysql

postgres

trac

dreamhost

wipi-c

gdc

php

subversion

hlsl

shader

docker

gcp

cpp

giga

siggraph

webvr

cg

glsl

syntax-highlight

visual-studio

cgfx

mac

unity3d

maya

ui

nlp

react

redis

parquet

ubuntu

sk-telecom

unity

mobile

jquery

high-performance-computing

digital-content-creation

google-maps

rabbitmq

argo

snowflake

data-engineering

big-data

reactjs