TRAINING SCHEDULE

9:00 - 10:00 am - Redis

9:45 - 11:15 am - Redis as a Primary Data Store

11:25 - 12:55 pm - Redis + K8s

1:55 pm - 3:25 pm - Deep Learning with Redis

3:35 pm - 5:05 pm - Streaming Architectures

EVENT INFORMATION

Redisconf 2019

Training Day: April 1, 2019

Conference Days: April 2 - 3, 2019

VENUE

Pier 27

The Embarcadero, San Francisco, CA 94111

PRESENTED BY

Redis Labs, Inc

[email protected]

0900..1000 Redis Introduction

- Salvatore Sanfilippo https://github.com/antirez

- RDB, AOF -> files, persistent

redis-cli

> ping

PONG

telnet localhost 6379

> ping

+PONG

> SET mykey somevalue

> GET mykey

"somevalue"

- a key such as key1.2.3 maps to a (possibly complex) data structure

- flat key-value space

- values can be more complex: list, hash, …

> set myobject:1 "ksdfsd dsf sdf sdfsdf sdf "

> get myobject:1

1) hash

redis hmset: key-value type data

> hmset myobject:100 user antirez id 1234 age 42

> hgetall myobject:100

1) "user"

2) "antirez"

3) "id"

4) "1234"

5) "age"

6) "42"

> hget myobject:100 id

"1234"

> hget myobject:100 user

"antirez"

2) list

> rpush mylist 1

> rpush mylist 2

> rpush mylist 3 4 5 6 7

> lrange mylist 0 -1

1) "1"

2) "2"

...

7) "7"

> lpush mylist a b c

> lrange mylist 0 -1

1) "c"

2) "b"

3) "a"

4) "1"

...

10) "7"

> lpop mylist

"c"

> lpop mylist

"b"

> rpop mylist

"7"

> rpop mylist

"6"

> lrange mylist 0 -1

1) "1"

2) "2"

3) "3"

4) "4"

> RPOPLPUSH mylist mylist

"4"

> lrange mylist 0 -1

1) "4"

2) "1"

3) "2"

4) "3"

> RPOPLPUSH mylist mylist

"3"

> lrange mylist 0 -1

1) "3"

2) "4"

3) "1"

4) "2"

- rpoplpush is really good: with the same list as source and destination it rotates the list (circular list)
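The rotation shown in the transcript above can be sketched without a server. This is a minimal in-memory stand-in using `collections.deque`; the `rpoplpush` helper is a hypothetical name for illustration, not a Redis client call.

```python
from collections import deque


def rpoplpush(src: deque, dst: deque):
    """Pop the tail of src and push it onto the head of dst (in-memory stand-in)."""
    if not src:
        return None  # Redis replies with nil when the source list is empty
    item = src.pop()
    dst.appendleft(item)
    return item


mylist = deque(["1", "2", "3", "4"])
first = rpoplpush(mylist, mylist)   # same list as source and destination
second = rpoplpush(mylist, mylist)
print(first, second, list(mylist))
```

Each call moves the last element to the front, so repeated calls cycle through the list without losing items.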

> set object:1234 foo

> set object:1234 bar

> get object:1234

"bar"

- to retain the history

> del object:1234

> lpush object:1234 foo

> lpush object:1234 bar

> lrange object:1234 0 0

"bar"

> lrange object:1234 0 -1 # to see the history

1) "bar"

2) "foo"

> ltrim object:1234 0 1

> lrange object:1234 0 -1

1) "bar"

2) "foo"

> lpush A username:239:salvatore

> lpush B username:239:salvatore

> lpush A username:03232:anna

> lrange A 0 -1

1) "username:03232:anna"

2) "username:239:salvatore"

> lrange B 0 -1

1) "username:239:salvatore"

3) sorted set

- good for leaderboards

> zadd myzset 1.2 A 3.2 B 5 C # score-member pairs

> zrange myzset 0 -1

1) "A"

2) "B"

3) "C"

> zadd myzset 0.5 C

> zrange myzset 0 -1

1) "C"

2) "A"

3) "B"

> zrange myzset 0 -1 WITHSCORES

1) "C"

2) "0.5"

3) "A"

4) "1.2"

5) "B"

6) "3.2"

> del myzset

> zadd myzset 100 antirez 5 trent 2000 anna

> zrange myzset 0 -1 WITHSCORES

1) "trent"

2) "5"

3) "antirez"

4) "100"

5) "anna"

6) "2000"

> ZRANK myzset anna

2

> ZRANK myzset trent

0

> ZRANK myzset antirez

1

- pop the element

> zpopmin myzset

1) "trent"

2) "5"

> zpopmin myzset

1) "antirez"

2) "100"

> zpopmin myzset

1) "anna"

2) "2000"

> zpopmin myzset

(empty list or set)
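The leaderboard behaviour above (rank by score, pop the lowest) can be sketched in plain Python. `TinySortedSet` is a hypothetical stand-in built on `bisect`; it is only meant to mirror the observable results of ZADD, ZRANK and ZPOPMIN, not how Redis implements sorted sets.

```python
import bisect


class TinySortedSet:
    """Hypothetical in-memory stand-in for a sorted set."""

    def __init__(self):
        self._scores = {}   # member -> score
        self._order = []    # sorted list of (score, member)

    def zadd(self, score, member):
        # Re-adding a member replaces its score, as in the "zadd myzset 0.5 C" step.
        if member in self._scores:
            self._order.remove((self._scores[member], member))
        self._scores[member] = score
        bisect.insort(self._order, (score, member))

    def zrank(self, member):
        if member not in self._scores:
            return None
        return bisect.bisect_left(self._order, (self._scores[member], member))

    def zpopmin(self):
        if not self._order:
            return None
        score, member = self._order.pop(0)
        del self._scores[member]
        return member, score


board = TinySortedSet()
for score, member in [(100, "antirez"), (5, "trent"), (2000, "anna")]:
    board.zadd(score, member)
ranks = {m: board.zrank(m) for m in ("trent", "antirez", "anna")}
lowest = board.zpopmin()
print(ranks, lowest)
```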

1000..1115 Redis as a Primary Data Store

- Kyle Davis

- appsembler -> using their platform for test

Overview & Introduction

- redis can be your primary database, not just a cache

- redis is your single source of truth

- redis is your operational database

- database/datastore for disaster recovery

- this requires:

- proper configuration of redis

- suitable data

- proper data structuring

- Single Source of Truth

- you write and read from redis directly

- there is no database that defines what is true to your application

- you may have other methods to define truth for archival or non-working set

- data shouldn’t be operated on from more than one place

- Advantages:

- Speed

- Low application complexity

- Low architectural complexity

- Disadvantages:

- Mistrust

- Data is not always suitable

Transaction

- ACID transaction

- Atomicity, Consistency, Isolation, Durability

- redis is single threaded

- Single client - execution flow

- two client - execution flow

- multiple command transaction

- MULTI to start transaction block

- EXEC to close transaction block

- commands are queued until exec

- all commands or no commands are applied

- transaction

> MULTI

> sadd site:visitors 125

> incr site:raw-count

> hset sessions:124 userid salvatore ip 127.0.0.1

> EXEC

- discard example

> DISCARD

- transaction with errors - syntactic error

> EXEC

(error) EXECABORT Transaction discarded because of previous errors.

- transaction with errors - semantic error

> EXEC

- conditional execution/ optimistic concurrency control

- use WATCH, UNWATCH

- dependent modifications - incorrect version

- dependent modifications - correct version

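python code (redis-py)

tx = r.pipeline()

while True:

    try:

        tx.watch(debitkey)  # <== ** EXEC fails if debitkey changes after this point

        tx.multi()

        tx.hincrbyfloat(...)  # arguments were not captured in these notes

        tx.execute()

        break

    except WatchError:  # <== **

        # watched 'balance' value had changed

        # so retry

        continue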
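The watch-and-retry idea can be illustrated without a Redis server. The sketch below is an in-memory simulation under stated assumptions: `TinyStore`, its per-key version counter, and the `debit` helper are all hypothetical names, and the real WATCH mechanism is internal to Redis rather than a version number the client sees.

```python
class WatchError(Exception):
    """Raised when a watched key changed before the transaction ran."""


class TinyStore:
    """Hypothetical in-memory store that tracks a version number per key."""

    def __init__(self):
        self.data = {}
        self.versions = {}

    def get(self, key):
        return self.data.get(key, 0.0)

    def set(self, key, value):
        self.data[key] = value
        self.versions[key] = self.versions.get(key, 0) + 1

    def watch(self, key):
        return self.versions.get(key, 0)   # remember the version we saw

    def execute(self, key, seen_version, new_value):
        if self.versions.get(key, 0) != seen_version:
            raise WatchError(key)          # someone else wrote in between
        self.set(key, new_value)


def debit(store, key, amount, interfere=None):
    """Subtract amount from key, retrying until no concurrent write is detected."""
    attempts = 0
    while True:
        attempts += 1
        seen = store.watch(key)
        balance = store.get(key)
        if interfere and attempts == 1:
            interfere()                    # simulate a concurrent writer
        try:
            store.execute(key, seen, balance - amount)
            return attempts
        except WatchError:
            continue                       # re-read the value and retry


store = TinyStore()
store.set("balance", 100.0)
attempts = debit(store, "balance", 30.0, interfere=lambda: store.set("balance", 50.0))
print(attempts, store.get("balance"))
```

The first attempt is rejected because the balance changed after it was read; the second attempt recomputes from the fresh value, which is the point of the "correct version" of dependent modifications.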

- disk based persistence - options

- RDB = snapshot: store a compact point-in-time copy (e.g. every 30 minutes or hourly)

- AOF = append only file: write to disk (fsync) every second or on every write (tunable)

- RDB Persistence

- Persistence

- fork redis process

- child process writes new RDB file

- atomically replace old RDB file with new

- Trigger manually

- SAVE command (synchronous)

- BGSAVE (background)
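The "write a new file, then atomically replace the old one" step can be sketched as follows. This is only an illustration of the replace pattern: it does not fork, it uses JSON instead of the RDB format, and `save_snapshot` and the file names are hypothetical.

```python
import json
import os
import tempfile


def save_snapshot(data: dict, path: str) -> None:
    """Write data to a temp file in the same directory, then atomically swap it in."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix="temp-", suffix=".snapshot")
    try:
        with os.fdopen(fd, "w") as tmp:
            json.dump(data, tmp)
            tmp.flush()
            os.fsync(tmp.fileno())      # make sure the bytes reach the disk first
        os.replace(tmp_path, path)      # atomic rename over the old snapshot
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


workdir = tempfile.mkdtemp()
snapshot_path = os.path.join(workdir, "dump.json")
save_snapshot({"mykey": "somevalue"}, snapshot_path)
save_snapshot({"mykey": "newvalue"}, snapshot_path)
with open(snapshot_path) as f:
    restored = json.load(f)
print(restored)
```

A reader of the snapshot path sees either the complete old file or the complete new one, never a half-written file.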

- Persistence

- AOF Persistence

- configuration

- appendonly directive (redis.conf): appendonly yes

- runtime: CONFIG SET appendonly yes

- AOF file fsync options

- trade off speed for data safety

- options (appendfsync): no, everysec, always

- BGREWRITEAOF

- configuration

- Redis enterprise durability - persistence topologies

- master replica, writing from replica is slow

- master replica, writing from master is faster but still slow, tunable

- Transactions Summary

- no rollbacks

- rollback-free transactions, a different beast from rollback transactions

- atomic

- isolation, consistency

- durability

- transactions work differently than in other databases, but achieve the same goals

- single threaded event-loop for serving commands

- WATCH for optimistic concurrency control

Data Structuring

- hands on

- redis is a building block

- Ordered data

- each row is a hash

- rows can be accessed by a key

- sorted sets manage any type of ordering or index

- Add an item

- with sorted set and hashes

MULTI

HSET people:1234 first kyle last davis birthyear 1981

ZADD people:by-birthyear 1981 people:1234

EXEC

- MULTI/EXEC clustering trade-off

- Read an item

HGETALL people:1234

# HGET people:1234

# HMGET people:1234

- LIST items

ZRANGEBYSCORE people:by-birthyear 1980 1990

1) people

...

> HGETALL people:1234

- Lua is redis’s built-in scripting language

- UPDATE item

MULTI

HSET people:1234 birthyear 1982

ZADD people:by-birthyear 1982 people:1234

EXEC

- DELETE item

MULTI

UNLINK people:1234

ZREM people:by-birthyear people:1234

EXEC
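The add / list / update / delete pattern above keeps a row (hash) and an index entry (sorted set) in step. A minimal in-memory sketch of the same bookkeeping, assuming a dict for the hashes and a sorted list for the index; the helper names and the second person are hypothetical.

```python
import bisect

rows = {}    # key -> dict of fields (stands in for the Redis hashes)
index = []   # sorted (birthyear, key) pairs (stands in for people:by-birthyear)


def put_person(key, **fields):
    """Add or update a row and keep the birthyear index in step."""
    old = rows.get(key)
    if old is not None:
        index.remove((old["birthyear"], key))   # drop the stale index entry
    rows[key] = dict(fields)
    bisect.insort(index, (fields["birthyear"], key))


def delete_person(key):
    """Remove the row and its index entry together."""
    old = rows.pop(key, None)
    if old is not None:
        index.remove((old["birthyear"], key))


def by_birthyear(low, high):
    """Inclusive range lookup, like ZRANGEBYSCORE low high."""
    return [key for year, key in index if low <= year <= high]


put_person("people:1234", first="kyle", last="davis", birthyear=1981)
put_person("people:5678", first="anna", last="smith", birthyear=1995)
found = by_birthyear(1980, 1990)
put_person("people:1234", first="kyle", last="davis", birthyear=1982)
after_update = list(index)
delete_person("people:1234")
remaining = by_birthyear(1900, 2100)
print(found, after_update, remaining)
```

In Redis the two writes are wrapped in MULTI/EXEC so a reader never sees a row without its index entry; the sketch is single-threaded, so it has no equivalent step.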

Clustering, keys & transactions

- 4 Shard/ Node Cluster

- MULTI/EXEC

- something could die during transaction going to different shards

- how to prevent this

- Data layout - isolated data

- data associated with one company/account/etc

- operationally, you may not need any non-isolated access

- Keep all the data together with key “tags” using curly braces

c:{xyz}:people:1234

c:{xyz}:people:by-birthyear

# hash('xyz') = n => will always go to the same shard
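To see why both tagged keys land together, here is a sketch of the slot calculation as described in the open-source Redis Cluster specification (CRC16 of the tag, modulo 16384). It assumes that rule applies; a Redis Enterprise database may be configured with a different hashing policy. `key_hash_slot` is a name chosen for illustration.

```python
import binascii


def key_hash_slot(key: str) -> int:
    """Slot for a key: hash only the text inside the first {...} if it is non-empty."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:   # ignore empty tags like "{}"
            data = data[start + 1:end]
    # binascii.crc_hqx with an initial value of 0 is the CRC16/XMODEM variant
    return binascii.crc_hqx(data, 0) % 16384


slot_row = key_hash_slot("c:{xyz}:people:1234")
slot_index = key_hash_slot("c:{xyz}:people:by-birthyear")
print(slot_row, slot_index, slot_row == slot_index)
```

Because only `xyz` is hashed, every key carrying that tag maps to the same slot, which is what makes MULTI/EXEC across them safe in a cluster.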

- Without MULTI/EXEC and non-isolated data

- trade offs and complexity but still possible

- non-critical data/application

- always manipulate your data first

- hset/unlink/del/etc

- then manipulate index

- your application needs to be able to handle missing data and incorrect indexes

- possible index cleanup tasks with background SCAN commands

- event pattern

- data changes are written as a series of changes adding to a stream

- indexes are generated

- Easier with “RediSearch”

# init

FT.CREATE people-index SCHEMA birthyear NUMERIC last TEXT first TEXT

# add

HSET people:1234 birthyear 1981 last davis first kyle

FT.ADDHASH people-index people:

# update

# read

FT.GET

# LIST

FT.SEARCH people-index "@birthyear:[1980 1990]"

# DELETE

FT.DEL

- RediSearch takes care of the clustering*!

- you don’t have to worry about it

- * clustering is only available in the enterprise edition

Examples:

- Data suitability / Non-relational data

- can your data be primarily referenced by a key?

- Does your data’s structure vary widely?

- How normalized is your data?

- Is your data naturally isolated by particular

- Denormalized Data

- data is stored the way it will be retrieved

- Breaks the rule against repeating data

- lower complexity

- Bad example: Highly isolated data

- student record system at a university

- One screen that displays

- highly relational -> don’t build it with redis

- Good example: Student communication Portal

1130..0100 Redis + K8s

- Redisconf2019 google group

- email [email protected] and will add you https://groups.google.com/forum/#!forum/redisconf2019

- Why?

- Brendan Burns: k8s founder, KubeCon '15 keynote

- “We’re making reliable, scalable, agile distributed systems a CS101 exercise”

- How?

- Kubernetes speeds up the development process by:

- making deployments easy

- automating deployments

- simplifying updates/upgrades

- What?

- container orchestration cloud platform

- https://github.com/kubernetes/kubernetes

- CNCF

- GKE, Redhat openshift, pivotal container service, azure container service

- Where?

- 54% of fortune 500 companies - business insider 2019, Jan

K8s concepts

- what k8s can do for me? workloads.

- run a workload consisting of docker containers

- manage a cluster of many nodes (aka hosts, instances)

- spread the workload across the nodes intelligently

- dispatch incoming traffic to the relevant backend (ingress)

- what k8s can do for me? management

- monitor services and restart them as needed

- manage scaling up/down services

- manage deployment of new versions in various ways

- how does it work?

- api/ master nodes/ worker nodes

- input by kubectl/ webui

- master nodes: api server, scheduler, controllers, etcd

- worker nodes: pods, docker, kubelet, kube-proxy

kubectl get pods

kubectl apply -f pod-redis.yaml

kubectl get pods -o wide

# in yaml

command: ['sleep', 'infinity']

kubectl apply -f pod-client.yaml

kubectl get pods

kubectl exec -it redis bash

# inside of the pod

ps

cat /proc/1/cmdline

redis-cli

keys *

set hello world

exit

kubectl exec -it client bash

# need to know redis address

kubectl get pods -o wide

# read IP, 10.42.2.117

kubectl exec -it client bash

redis-cli

command not found

nc -v 10.42.2.117 6379

get hello

world

vi service-redis.yaml

kubectl apply -f service-redis.yaml

vi pod-redis.yaml

# add labels: app: redis

kubectl apply -f pod-redis-labels.yaml

kubectl exec -it client bash

nc -v redis 6379 # without ip address

redis.default.svc.cluster.local [10.43.253.180] 6379(?) open

get hello

world

kubectl delete all --all --grace-period

vi deployment-redis.yaml

kubectl get po

kubectl get events

kubectl create -f deployment-redis.yaml

kubectl scale deployment redis --replicas 3

kubectl get po

kubectl scale deployment redis --replicas 1

vi deployment-redis.yaml

# upgrade from 5.0.3 to 5.0.4

# change the version to 5.0.4

kubectl apply -f deployment-redis.yaml

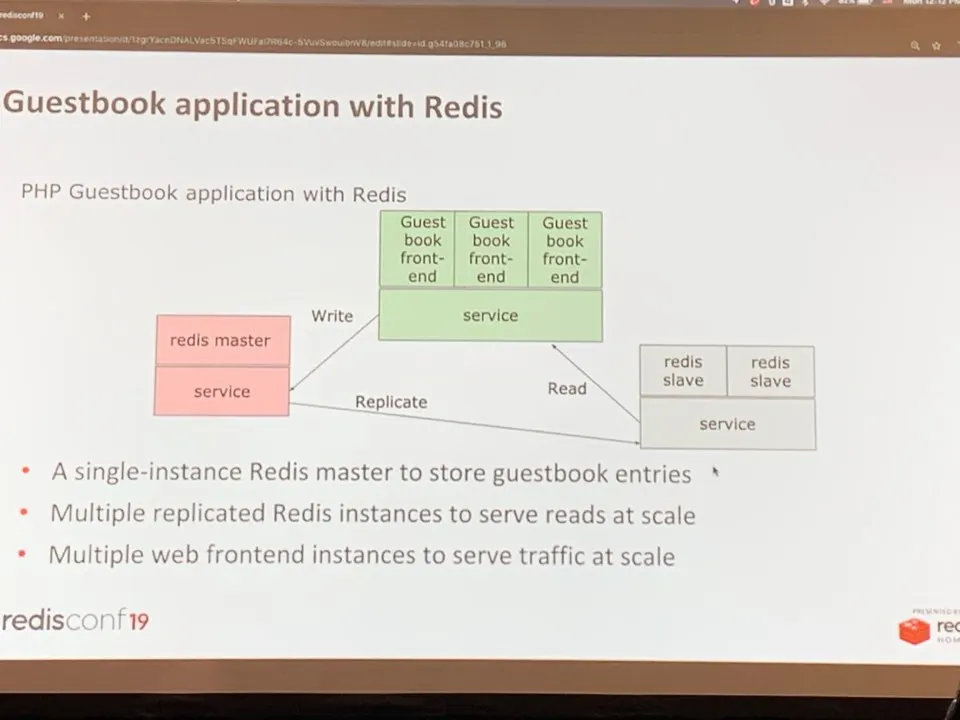

Workshop: Guestbook demo on github

- https://github.com/amiramm/guestbook-demo

- workshop summary

- deploy k8s cluster on GCP

- create new namespace

- create redis master deployment

- deploy a redis master

- deploy redis slaves

- deploy guestbook application frontend and expose to internet

- Guestbook application with redis

- PHP guestbook with redis

- https://console.cloud.google.com

gcloud beta container clusters get-credentials standard-cluster-1 --region us-west1 --project proven-reality-226706

# turn off tmux settings

# activate cloud shell

git clone https://github.com/amiramm/guestbook-demo

# create namespace

kubectl get namespaces

vi guestbook-demo/namespace.json

# metadata

labels: name: "amizne"

kubectl apply -f guestbook-demo/namespace.json

kubectl get namespaces --show-labels

kubectl config view

# need context and user

kubectl config current-context

kubectl config set-context amizne --namespace=amizne --cluster=gke_redisconf19-k8s-001_us-west1_standard-cluster-1

kubectl config use-context amizne

watch -n 5 kubectl get pods

kubectl apply -f guestbook-demo/redis-master-deployment.yaml

Operator

- custom resource definition

kind: CustomResourceDefinition

spec:

group: app.redislabs.com

- for redis enterprise customers

- Custom Resource + Custom Controller = Operator

- Stateful Set Controller

- Redis Enterprise Cluster (REC) Controller

- can upgrade Redis Enterprise

kubectl get crd

kubectl apply -f crd.yaml

kubectl get all

kubectl apply -f redis-enterprise-cluster.yaml

- What operator Gives You

- life cycle control

- simple configuration/reconfiguration

- simple deployment

- k8s native solution

- automates complex operations

0200..0330 Serving Deep Learning Models at Scale with RedisAI

- Deep Learning with Redis

- Luca Antiga, [tensor]werk, CEO

- Orobix co-founder, PyTorch contributor, co-author of Deep Learning with PyTorch

- Agenda

- deep learning basics

- RedisAI: architecture, api, operation

- Hands-on: image recognition and text generation

- Appsembler: virtual host

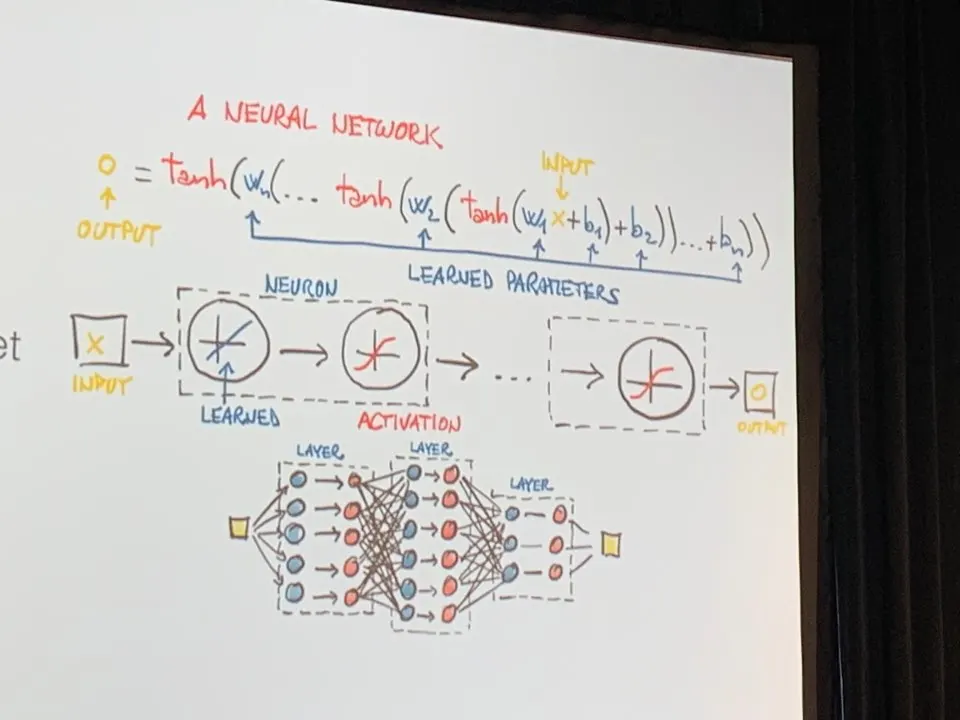

Deep learning basics

- The era of data-driven software

- programs whose behaviour is defined by data

- new era for software development

- needs to leverage best practices of software engineering

- from data scientists to developers

- Deep learning

- deep neural networks approximate functions based on examples

- Pervasive

- Solves problems that looked very hard a few years back

- Limit is the data, not the programmers(kinda)

- Not AGI, but a tool with superpowers

- image with ladies selling vegetables => Vision deep CNN => Language generating RNN => A group of people shopping at outdoor market, There are many vegetables at the fruit stand.

- Deep learning frameworks

- Tensorflow, PyTorch, mxnet, CNTK, Chainer, DyNet, DL4J, Flux

- once the model is trained, run it in production

- Production (ONNX, JIT, TorchScript)

- Deep Learning 101 - Tensors

- tensor = multi-dimensional array

- scalar 0d

- vector 1d

- matrix 2d

- tensor 3d..

- the main data structure in deep learning

- multidimensional arrays: arrays that can be indexed using more than one index

- memory is still 1D

- it’s just a matter of access and operator semantics
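The point that memory stays 1D while the indexing is multi-dimensional can be shown with a small sketch. It assumes row-major (C-style) layout, which is one common convention; `flat_index` is a hypothetical helper, not part of any framework.

```python
def flat_index(index, shape):
    """Map a multi-dimensional index to an offset in row-major 1D storage."""
    offset = 0
    for i, dim in zip(index, shape):
        if not 0 <= i < dim:
            raise IndexError(index)
        offset = offset * dim + i
    return offset


shape = (2, 3)                   # a 2x3 matrix
storage = [1, 2, 3, 4, 5, 6]     # the same six numbers, laid out flat
value = storage[flat_index((1, 2), shape)]
print(value)
```

Reshaping a tensor therefore only changes `shape`; the flat storage can stay where it is.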

- Deep Learning 101 - Models

- operation on tensors to produce other tensors

- operations are laid out in a computation graph

- a graph is like a VM IR with an op-set limited to tensor ops

- E.g.

- linear transformation (w*x + b)

- non-linearity: gamma(w*x + b), heavily nested
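A nested gamma(w*x + b) can be written out in a few lines of plain Python. The sketch assumes ReLU as the non-linearity (the notes do not say which one the speaker used), and the weights are made-up numbers for illustration.

```python
def relu(values):
    """One possible choice for the non-linearity gamma."""
    return [max(0.0, v) for v in values]


def linear(weights, x, bias):
    """Compute w*x + b for a weight matrix given as a list of rows."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def layer(weights, x, bias):
    return relu(linear(weights, x, bias))


x = [1.0, 2.0]
hidden = layer([[1.0, -1.0], [0.5, 0.5]], x, [0.0, 1.0])   # first gamma(w*x + b)
output = layer([[2.0, 1.0]], hidden, [-1.0])               # nested second layer
print(hidden, output)
```

Each layer's output tensor is the next layer's input, which is the "heavily nested" structure a computation graph records.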

- Deep Learning 101 - Learning

- Optimizing weights

- So you’ve got your model trained

- Now what?

- Production strategies

- Python code behind: e.g. Flask

- Execution service from cloud provider

- Runtime

- tensorflow serving

- clipper

- nvidia tensorRT inference server

- MXNet Model Server

- Bespoke solutions(C++, ..)

- jupyter, flask, python, docker

- Model server architecture

- inception V3 model file

- model server

- REST/RPC: what's in this image? -> panda (0.89)

- https://medium.com/@vikati/the-rise-of-the-model-servers-9395522b6c58

- Production requirements

- must fit the technology stack

- not just about languages, but about semantics, scalability, guarantees

- run anywhere, any size

- composable building blocks

- must try to limit the amount of moving parts

- and the amount of moving data

- must make best use of resources

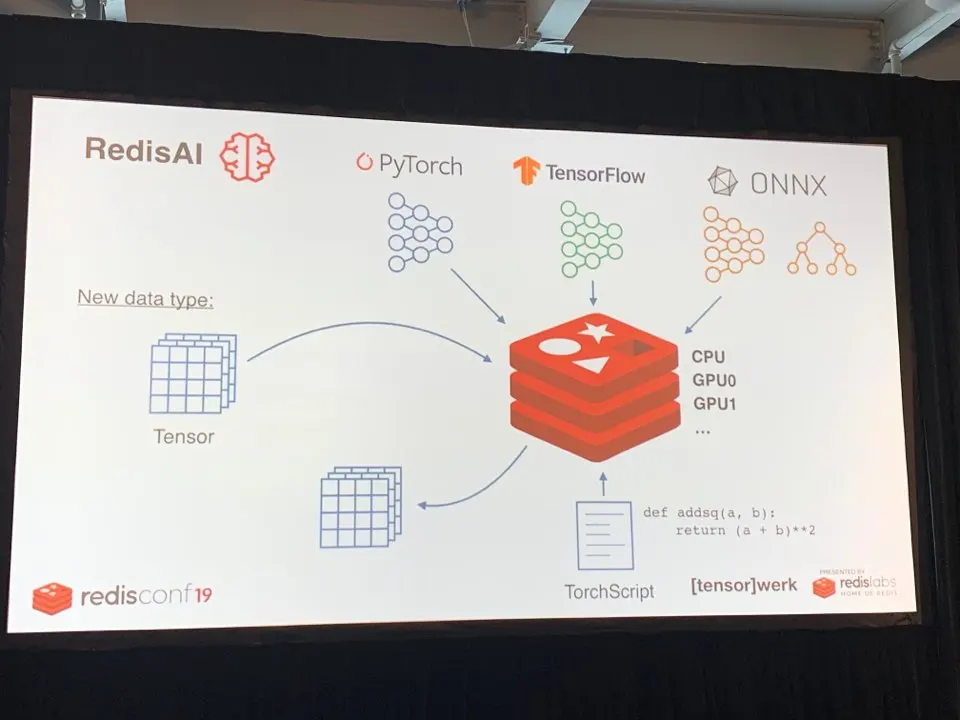

- RedisAI

- a redis module providing

- tensors as a data type

- and deep learning model execution

- on cpu and gpu

- it turns redis into a full-fledged deep learning runtime

- while still being redis

- new data type: tensor

- ONNX is a standard format for neural network

- ONNX-ML: just machine learning

- TorchScript

def addsq(a, b): return (a + b)**2

- Architecture

- tensor: framework-agnostic

- queue + processing thread

- backpressure

- redis stays responsive

- models are kept hot in memory

- client blocks

- Where to get it

- https://redisai.io

- https://github.com/RedisAI/RedisAI

- go read license

$ docker run -p 6379:6379 -it --rm redisai/redisai

or

git clone git@github.com:RedisAI/RedisAI.git

bash get_deps.sh

mkdir build && cd build

cmake -DDEPS_PATH=../deps/install .. && make

cd ..

redis-server --loadmodule build/redisai.so

- git LFS support

- installs cuda

- set up some envs

bash get_deps.sh cpu # cpu only

LD_LIBRARY_PATH=`pwd`/deps/install/libs redis-server --loadmodule build/redisai.so

API: Tensor

- AI.TENSORSET

- AI.TENSORGET

AI.TENSORSET foo FLOAT 2 2 VALUES 1 2 3 4

AI.TENSORSET foo FLOAT 2 2 BLOB < buffer.new

AI.TENSORGET foo BLOB

AI.TENSORGET foo VALUES

AI.TENSORGET foo META

API: Model

- AI.MODELSET

AI.MODELSET resnet18 TORCH GPU < foo.pt

AI.MODELSET resnet18 TF CPU INPUTS in1 OUTPUTS linear4 < foo.pt

- to inspect a TF graph (e.g. to find the input and output node names), use Netron

- https://www.codeproject.com/Articles/1248963/Deep-Learning-using-Python-plus-Keras-Chapter-Re

- AI.MODELRUN

AI.MODELRUN resnet18

- how to export models

- tensorflow(+keras) : freeze graph

API: Script

- AI.SCRIPTSET

- AI.SCRIPTRUN

AI.SCRIPTSET myadd2 GPU < addtwo.txt

AI.SCRIPTRUN myadd2 addtwo INPUTS foo OUTPUTS BAR

addtwo.txt:

def addtwo(a, b): return a + b

Scripts?

- SCRIPT is a TorchScript interpreter

- Python-like syntax for tensor ops

- on CPU and GPU

Hands-on

- create two 3x3 tensors

- create a SCRIPT that performs element-wise multiply

- set it

- run it

# make mymul.txt

def mymul(a, b):

    return a*b

# run redis-cli

AI.TENSORSET foo FLOAT 2 2 VALUES 1 1 2 2

AI.TENSORGET foo VALUES

AI.TENSORGET foo BLOB

AI.TENSORSET bar FLOAT 3 3 VALUES 2 2 2 2 2 2 2 2 2

AI.SCRIPTSET baz CPU ""

# from outside to load script

redis-cli -x AI.SCRIPTSET baz CPU < mymul.txt

# after loading

AI.SCRIPTRUN baz mymul INPUTS foo bar OUTPUTS jar

AI.TENSORGET jar values

- go to https://github.com/RedisAI/redisai-examples

- image recognition

- Hands-on

- will run two hands-on demos

- image recognition (with tensorflow and pytorch)

- text generation (with pytorch)

- using nodejs as the client and pre-trained models

wget https://s3.amazonaws.com/redisai/redisai-examples.tar.gz

tar -xvzf redisai-examples.tar.gz

cd redisai-examples/js_client

npm install jimp ioredis

0340..0450 Streaming Architectures

- Redis Streams

- were introduced in redis v5

- necessity is the mother of invention

- are (yet another) redis data structure <- you can store data in it!

- are a storage abstraction for log-like data

- can also provide communication between processes

- are the most complex/robust redis data structure (to date)

- What is a stream?

- append-only list of entries called messages

- messages are added to a stream by “producers”.

- every message has an ID that uniquely identifies it, embeds a notion of time and is immutable.

- order of the messages is immutable

- a message contains data in a hash-like (field-value pairs) structure

- data is immutable, but messages can be deleted from the stream

- messages can be read from the stream by id or by ranges
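The properties listed above (append-only, time-based unique IDs, range reads) can be sketched with a toy in-memory stream. `TinyStream` is a hypothetical stand-in; the clock is passed in as `now_ms` so the example is deterministic, whereas Redis reads the server clock when the ID is given as `*`.

```python
class TinyStream:
    """Hypothetical append-only stream with <milliseconds>-<sequence> IDs."""

    def __init__(self):
        self.entries = []      # append-only list of ((ms, seq), fields)
        self.last = (0, 0)     # ID of the most recent entry

    def xadd(self, fields, now_ms):
        ms, seq = self.last
        if now_ms > ms:
            new_id = (now_ms, 0)
        else:
            new_id = (ms, seq + 1)   # same millisecond: bump the sequence number
        self.last = new_id
        self.entries.append((new_id, dict(fields)))
        return f"{new_id[0]}-{new_id[1]}"

    def xrange(self, start, end):
        """Entries whose ID lies in the inclusive (ms, seq) range."""
        return [(f"{ms}-{seq}", fields)
                for (ms, seq), fields in self.entries
                if start <= (ms, seq) <= end]


stream = TinyStream()
times = (1554159364534, 1554159364583, 1554159364583, 1554159364583)
ids = [stream.xadd({"foo": "bar"}, now_ms=t) for t in times]
same_ms_tail = stream.xrange((1554159364583, 1), (1554159364583, 2))
print(ids, len(same_ms_tail))
```

Because IDs only ever grow, the order of messages is fixed at append time and a range read is a simple comparison on the ID.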

XACK

XADD

XCLAIM

XDEL

XGROUP

XINFO

XLEN

XPENDING

XRANGE

XREAD

XREADGROUP

XREVRANGE

XTRIM

Hands on

- sample code: https://github.com/itamarhaber/streamsworkshop

127.0.0.1:6379> keys *

(empty list or set)

127.0.0.1:6379> xadd mystream * foo bar

"1554159172959-0" # <millisecond timestamp>-<sequence number>

127.0.0.1:6379> xadd mystream * foo bar

"1554159184276-0"

127.0.0.1:6379> 5 xadd s1 * foo bar # a leading number makes redis-cli repeat the command that many times

"1554159364534-0"

"1554159364535-0"

"1554159364583-0"

"1554159364583-1" # same millisecond, so the sequence number increments

"1554159364583-2" # same millisecond, so the sequence number increments

> 500 xadd mystream * foo bar

127.0.0.1:6379> xlen mystream

(integer) 507

127.0.0.1:6379> xtrim mystream maxlen ~ 123

(integer) 303

127.0.0.1:6379> xlen mystream

(integer) 204

streamsworkshop$ python producer_1.py

1554159899.914: Stream numbers has 0 messages and uses None bytes

1554159899.915: Produced the number 0 as message id 1554159899914-0

1554159900.917: Stream numbers has 1 messages and uses 581 bytes

1554159900.917: Produced the number 1 as message id 1554159900916-0

1554159901.919: Stream numbers has 2 messages and uses 592 bytes

redis-cli flushall

- code: producer_2.py

127.0.0.1:6379> XINFO STREAM numbers

1) "length"

2) (integer) 47

3) "radix-tree-keys"

4) (integer) 1

5) "radix-tree-nodes"

6) (integer) 2

7) "groups"

8) (integer) 0

9) "last-generated-id"

10) "1554160089688-0"

11) "first-entry"

12) 1) "1554160043587-0"

2) 1) "n"

2) "0"

13) "last-entry"

14) 1) "1554160089688-0"

2) 1) "n"

2) "46"

127.0.0.1:6379> XRANGE numbers - + COUNT 5

1) 1) "1554160043587-0"

2) 1) "n"

2) "0"

2) 1) "1554160044589-0"

2) 1) "n"

2) "1"

3) 1) "1554160045591-0"

2) 1) "n"

2) "2"

4) 1) "1554160046593-0"

2) 1) "n"

2) "3"

5) 1) "1554160047595-0"

2) 1) "n"

2) "4"

127.0.0.1:6379> XRANGE numbers 1554160047595-0 + COUNT 5

1) 1) "1554160047595-0"

2) 1) "n"

2) "4"

2) 1) "1554160048598-0"

2) 1) "n"

2) "5"

3) 1) "1554160049600-0"

2) 1) "n"

2) "6"

4) 1) "1554160050602-0"

2) 1) "n"

2) "7"

5) 1) "1554160051604-0"

2) 1) "n"

2) "8"

- code: range_1.py

127.0.0.1:6379> XRANGE numbers 1554159977896-2 1554159977896-2

(empty list or set)

- code: range_sum.py

streamsworkshop$ python range_sum.py

The running sum of the Natural Numbers Stream is 9045 (added 135 new numbers)

streamsworkshop$ python range_sum.py

The running sum of the Natural Numbers Stream is 9045 (added 0 new numbers)

127.0.0.1:6379> hgetall numbers:range_sum

1) "last_id"

2) "1554160177886-1"

3) "n_sum"

4) "9045"
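The second run adding 0 new numbers follows from storing `last_id` next to `n_sum` and only reading entries after it. The sketch below illustrates that idea in memory; it is not the workshop's range_sum.py, and `parse_id` and `running_sum` are hypothetical helpers.

```python
def parse_id(message_id):
    """Turn "ms-seq" into a comparable (ms, seq) tuple."""
    ms, seq = message_id.split("-")
    return int(ms), int(seq)


def running_sum(stream, state):
    """Add the 'n' field of every entry newer than state['last_id']; return how many."""
    last = parse_id(state["last_id"])
    added = 0
    for message_id, fields in stream:
        if parse_id(message_id) > last:
            state["n_sum"] += int(fields["n"])
            state["last_id"] = message_id   # remember where we stopped
            added += 1
    return added


stream = [("1-0", {"n": "0"}), ("2-0", {"n": "1"}), ("2-1", {"n": "2"})]
state = {"last_id": "0-0", "n_sum": 0}      # plays the role of numbers:range_sum
first_run = running_sum(stream, state)
second_run = running_sum(stream, state)     # nothing new, so nothing is added
stream.append(("3-0", {"n": "3"}))
third_run = running_sum(stream, state)
print(first_run, second_run, third_run, state)
```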

127.0.0.1:6379> XREAD STREAMS numbers 0

1) 1) "numbers"

2) 1) 1) "1554160043587-0"

2) 1) "n"

2) "0"

2) 1) "1554160044589-0"

2) 1) "n"

...

127.0.0.1:6379> XREAD COUNT 5 STREAMS numbers 1554160177486-0

1) 1) "numbers"

2) 1) 1) "1554160177886-0"

2) 1) "n"

2) "134"

127.0.0.1:6379> XREAD BLOCK 100 STREAMS numbers $

(nil)

- code: blockingread_sum.py

127.0.0.1:6379> XGROUP CREATE numbers grp 0

OK

127.0.0.1:6379> XREADGROUP GROUP grp c1 STREAMS numbers 0

1) 1) "numbers"

2) (empty list or set)

127.0.0.1:6379> XREADGROUP GROUP grp c1 COUNT 1 STREAMS numbers >

1) 1) "numbers"

2) 1) 1) "1554160043587-0"

2) 1) "n"

2) "0"

127.0.0.1:6379> XINFO GROUPS numbers

1) 1) "name"

2) "grp"

3) "consumers"

4) (integer) 2

5) "pending"

6) (integer) 2

7) "last-delivered-id"

8) "1554160044589-0"

127.0.0.1:6379> XINFO CONSUMERS numbers grp

1) 1) "name"

2) "c1"

3) "pending"

4) (integer) 1

5) "idle"

6) (integer) 99060

2) 1) "name"

2) "c2"

3) "pending"

4) (integer) 1

5) "idle"

6) (integer) 60502

127.0.0.1:6379> XACK numbers grp 1554161747918-0

(integer) 0

127.0.0.1:6379> XINFO CONSUMERS numbers grp

1) 1) "name"

2) "c1"

3) "pending"

4) (integer) 1

5) "idle"

6) (integer) 63254

2) 1) "name"

2) "c2"

3) "pending"

4) (integer) 1

5) "idle"

6) (integer) 176549

127.0.0.1:6379> XPENDING numbers grp

1) (integer) 195

2) "1554160043587-0"

3) "1554161864418-0"

4) 1) 1) "c1"

2) "194"

2) 1) "c2"

2) "1"

127.0.0.1:6379> XPENDING numbers grp - + 10

1) 1) "1554160043587-0"

2) "c1"

3) (integer) 194762

4) (integer) 2

...
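The bookkeeping behind the transcript above (delivery with `>`, the pending list, and XACK returning 0 for an ID that is not pending) can be sketched in memory. `TinyGroup` is a hypothetical stand-in that ignores idle times, delivery counts and XCLAIM.

```python
class TinyGroup:
    """Hypothetical consumer group over a list of (id, fields) entries."""

    def __init__(self, stream):
        self.stream = stream      # oldest entry first
        self.next_index = 0       # position after the last delivered entry
        self.pending = {}         # message id -> consumer that owns it

    def read_new(self, consumer, count=1):
        """Like XREADGROUP ... STREAMS key > : deliver never-delivered entries."""
        batch = self.stream[self.next_index:self.next_index + count]
        self.next_index += len(batch)
        for message_id, _ in batch:
            self.pending[message_id] = consumer
        return batch

    def ack(self, message_id):
        """Like XACK: 1 if the id was pending and is now acknowledged, else 0."""
        return 1 if self.pending.pop(message_id, None) is not None else 0


stream = [("1-0", {"n": "0"}), ("2-0", {"n": "1"})]
group = TinyGroup(stream)
got_c1 = group.read_new("c1")
got_c2 = group.read_new("c2")
acked = group.ack("1-0")
unknown = group.ack("9-0")       # never delivered, so nothing to acknowledge
print(got_c1, got_c2, acked, unknown, group.pending)
```

Each message goes to exactly one consumer in the group, and it stays pending until that consumer (or one that claims it) acknowledges it.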

python consumer_group.py

Action item:

- check the modules:

- https://redis.io/modules

- RediSearch, RedisJSON, RedisAI